Over the last 3 years, I have been working with MySQL almost every day. Even though non-relational databases like MongoDB are gaining more and more popularity every year, traditional SQL solutions are still widely used for many purposes. In this post, I will share some of my tricks I have been using to make my life easier. Note: most of those tips apply only to development machines, for production you should take more care.

1. Run MySQL in Docker

Seriously, in 2018 there is absolutely no need to run MySQL server natively by installing it, setting users and passwords, performing upgrades etc. You are wasting your client’s time if you are still doing it. Just use the sample Docker Compose file as a working starting point:

version: '3'

services:

mysql:

image: mysql:5.7

environment:

- MYSQL_ROOT_PASSWORD=root

- MYSQL_DATABASE=myproject

ports:

- 127.0.0.1:3306:3306

volumes:

- ./varlibmysql:/var/lib/mysql

After docker-compose up, you will have a working server with localhost-only port bound on standard port 3306, one user root/root and a pre-created „myproject” database. Throw in restart: always into Compose file if you want to keep the server running across reboots. That is enough for 95% of software projects, this solution is completely disposable and easy to recreate.

Note: I still have MySQL client installed natively on my development machine. Technically speaking, there is a way of avoiding this too and running the client itself from Docker image, but that is a matter of preference.

2. Store MySQL data in RAM

In Docker world, the best practice for handling data storage is to store it outside of the container filesystem – in case of a development machine, in some mounted directory on the host. A cool trick, at least on Linux, is to create a RAM-based filesystem called tmpfs and use it as data storage for MySQL. This will, of course, result in data loss after machine restart, but who cares about development data? If it is important, you should have a recent and verified backup anyway, right?

In my case, I am mounting a folder /tmp/varlibmysql into container /var/lib/mysql, since I am using ramdisk for the whole temporary directory to limit SSD wearing. So the relevant part of Compose file is:

...

volumes:

- /tmp/myproject/varlibmysql:/var/lib/mysql

...

There is a noticeable performance gain with this configuration: I measured the time it takes to run a few hundred Liquibase migrations on application startup and the time it takes to import ~1GB database dump.

- migrations: SSD 0:44, ramdisk 0:07 – huge speedup

- import: SSD 5:23, ramdisk 4:14 – small but noticeable speedup

Of course, this requires a sufficient amount of RAM to be available, in my case an 800 MiB uncompressed dump of application data requires 1.7 GiB of binary MySQL storage. But according to metrics I check sometimes, in a typical day over half of my machine’s RAM stays unused, so why not make good use of it?

3. Manage database dumps like a boss

I personally dislike edge case bugs, which often appear in web applications. One of the ways for a tester to describe such rarely occurring bug is to provide a list of bazillion intricate steps, that have to be carefully performed in order for the bug to appear. It is much easier to create a bug report with a database dump attached, which contains the whole state of the application. As a result, explaining and more importantly reproducing a bug is much easier and faster. However, if every time this happens there is a need to go to StackOverflow to recall mysqldump syntax, no one will want to do this. So let’s fix the issue once and for all:

$ cat export.sh

#! /bin/bash

set -e

DATABASE=myproject

if [ "$#" -ne 1 ]; then

echo "Usage: export.sh <filename.sql.bz2>"

exit 1

fi

echo "Exporting to $1..."

mysqldump --protocol tcp -h localhost -u root -proot ${DATABASE} \

| pv \

| bzip2 > "$1"

echo "Export finished."

$ cat import.sh

#! /bin/bash

set -e

DATABASE=myproject

if [ "$#" -ne 1 ]; then

echo "Usage: import.sh <filename.sql.bz2>"

exit 1

fi

echo "Importing from $1..."

bzcat "$1" \

| pv \

| mysql --protocol tcp -h localhost -u root -proot ${DATABASE}

echo "Importing finished."

$ cat drop.sh

#! /bin/bash

set -e

DATABASE=myproject

echo "Dropping and recreating ${DATABASE} ..."

mysql --protocol tcp -h localhost -u root -proot ${DATABASE} \

-e "drop database ${DATABASE}; create database ${DATABASE};"

echo "Done."

These are 3 scripts I use every day for SQL export, import and one extra for recreating a database for testing purposes. Those scripts use bzip2 compression for minimum file size and pv tool for visualising data flow.

4. Log executed queries

Recently I have been fixing some performance problems in one of our projects. Our business contact reported that „this specific webpage is slow when there are lots of users present”. I started looking around in Chrome Developer Tools and it became clear that the issue is on the backend side, as usual… I could not see any obvious bottlenecks in Java code, so I went a layer down into the database and yep, there was a performance problem there – some innocent SQL query was executed thousands of times for no reason. In order to debug such cases, query logging is a must, otherwise we are shooting in the dark.

You can enable basic query logging using those commands in MySQL console:

MySQL [myproject]> SET global general_log = 1; Query OK, 0 rows affected (0.00 sec) MySQL [myproject]> SET global log_output = 'table'; Query OK, 0 rows affected (0.00 sec)

From now on, all queries will be logged in special table mysql.general_log. Fun fact – this table is actually a real physical table, it can be searched, exported etc. – good for documenting bugfixes. Let’s create some sample database structure:

MySQL [myproject]> create table random_numbers(number float);

Query OK, 0 rows affected (0.00 sec)

MySQL [myproject]> insert into random_numbers values

(rand()), (rand()), (rand()), (rand());

Query OK, 4 rows affected (0.00 sec)

Records: 4 Duplicates: 0 Warnings: 0

And now run a few queries and see if they are captured in the log:

MySQL [myproject]> select * from random_numbers where number < 0.1; Empty set (0.00 sec) MySQL [myproject]> select * from random_numbers where number < 0.5; +----------+ | number | +----------+ | 0.254259 | +----------+ 1 row in set (0.00 sec) MySQL [myproject]> select * from random_numbers where number < 0.9; +----------+ | number | +----------+ | 0.777688 | | 0.254259 | +----------+ 2 rows in set (0.00 sec) MySQL [myproject]> select event_time, argument from mysql.general_log; +----------------------------+-------------------------------------------------+ | event_time | argument | +----------------------------+-------------------------------------------------+ | 2018-11-26 12:42:19.784295 | select * from random_numbers where number < 0.1 | | 2018-11-26 12:42:22.400308 | select * from random_numbers where number < 0.5 | | 2018-11-26 12:42:24.184330 | select * from random_numbers where number < 0.9 | | 2018-11-26 12:42:28.768540 | select * from mysql.general_log | +----------------------------+-------------------------------------------------+ 4 rows in set (0.00 sec)

Perfect! Keep in mind that „all queries” means all of all, so if you are using graphical database tools for viewing query logs, those „query querying logs” will be also there.

5. Use remote servers

MySQL protocol runs over TCP/IP (note to nitpickers: yes, it can also work through UNIX sockets, but the principle is the same). It is perfectly fine to use some remote MySQL server/service for local development instead of a local server. This is useful for working with big databases that would not fit onto tiny laptop SSD, or if we need more performance. The only concern is network latency, which can sometimes diminish any performance gains – but I think it is still a useful trick.

Let’s try Amazon RDS. It is pretty expensive for long-term usage but affordable for one-off tasks. Graphical setup wizard in AWS web console is pretty easy to use so I will not cover it here in full, however, keep attention on:

- DB instance class – pick the cheapest one for start, you can always upgrade it later if needed

- Allocated storage – the minimum (20 GiB) is actually a huge amount of space for a typical project, but you can increase it now if you need

- Username and password – pick something secure, because our instance will be publicly visible from the Internet

- Security group – pick/create a security group with full access from the Internet

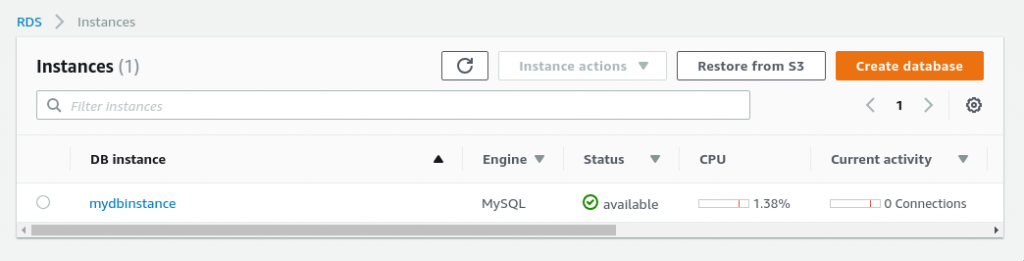

After a while, our instance should be running and available.

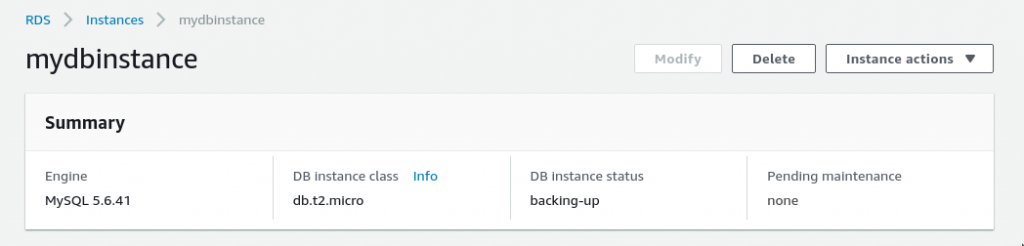

We can look up its details:

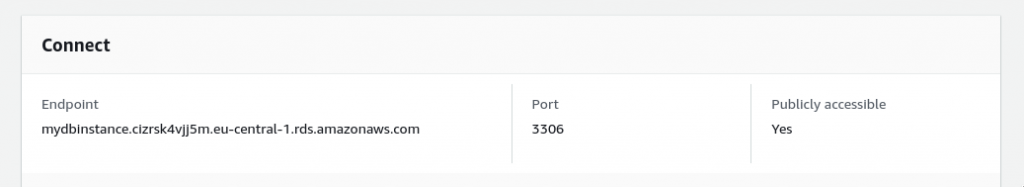

And finally, find the server hostname to connect to…

…like usual:

$ mysql -uroot -pmysqlletmein --protocol tcp \ -h mydbinstance.cizrsk4vjj5m.eu-central-1.rds.amazonaws.com Welcome to the MariaDB monitor. Commands end with ; or \g. Your MySQL connection id is 15 Server version: 5.6.41-log Source distribution Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others. Type 'help;' or '\h' for help. Type '\c' to clear the current input statement. MySQL [(none)]> select rand(); +--------------------+ | rand() | +--------------------+ | 0.8418013517202823 | +--------------------+ 1 row in set (0.06 sec)

Now we can configure our backend to use it and it should work exactly like a local server. Amazon RDS gives us some useful development features like real-time monitoring, automatic backups, spinning new database instances from snapshots and much more. Go try it!

6. Learn some SQL!

Finally, even if you love ORMs and you can’t live without Spring Data and sexy dynamic finders (which can sometimes be long enough to wrap on 4k screen), it is very beneficial to learn at least some SQL to understand how everything works underneath, how ORMs are mapping one-to-many and many-to-many relationships with the use of extra join tables, how transactions work (very important in high load systems) and so on. Also, some kinds of performance problems („N+1 select” being the most common) are completely undiscoverable without knowing how underlying SQL is generated and subsequently executed.

And that is all. Thanks for reaching this point and I hope you’ve learnt something.