Over a year ago, several programmers from our team and I took part in HackJam, where we decided to tackle the topic of artificial intelligence in game development. We were looking for an idea that would let us use AI in a less obvious way than just generating graphics or audio tracks (although we took advantage of those possibilities too).

We ultimately decided to create a prototype of a game with environmental puzzles, in which the central role was played by an AI-powered narrator. It commented on the player’s actions in real time, adjusting the tone and style of its speech to the flow of gameplay. As a result, each session was a unique experience, and the story differed depending on the decisions made.

The project was created under typical game jam conditions (quickly, chaotically, and very experimentally), but it showed us something important: AI doesn’t have to replace humans to make an impression. The greatest value appears when the technology supports creators’ creativity.

Since then, the industry has made a huge leap forward, and AI applications in game development have evolved in directions we didn’t even think about back then. In this article, I’d like to present several unconventional AI applications in game dev that go a step further than typical content generation.

Current Uses of AI in Game Dev

Motion Matching – Revolution in Character Animation

One of the most impressive breakthroughs is motion matching, best seen in The Last of Us Part II. Instead of traditional, rigid transitions between hand-picked animations, the system searches a database of thousands of motion frames in real time and selects the one that best fits the character’s current pose and intended movement. Characters move like real people, smoothly reacting to every terrain change or sudden direction shift.
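At its core, the selection step is a nearest-neighbor search over feature vectors. The following is a minimal sketch of that idea, not the implementation used in any shipped game: the feature layout (velocity plus desired direction), the clip names, and the weights are all assumptions made for illustration, and production systems use far richer features and faster search structures.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    clip: str        # hypothetical clip name
    index: int       # frame number inside that clip
    features: tuple  # (velocity_x, velocity_z, desired_dir_x, desired_dir_z)


def cost(a, b, weights):
    """Weighted squared distance between two feature vectors."""
    return sum(w * (x - y) ** 2 for x, y, w in zip(a, b, weights))


def best_match(database, query, weights):
    """Brute-force search for the frame whose features are closest to the query."""
    return min(database, key=lambda frame: cost(frame.features, query, weights))


# Toy database; a real one holds thousands of frames from motion capture.
DATABASE = [
    Frame("idle", 0, (0.0, 0.0, 0.0, 1.0)),
    Frame("walk_forward", 12, (0.0, 1.5, 0.0, 1.0)),
    Frame("run_forward", 30, (0.0, 4.0, 0.0, 1.0)),
    Frame("run_turn_left", 7, (-2.0, 3.0, -1.0, 0.0)),
]
WEIGHTS = (1.0, 1.0, 2.0, 2.0)  # assumed: desired direction matters more than velocity

# The character is running and the player pushes the stick hard to the left.
query = (-1.5, 3.2, -1.0, 0.0)
match = best_match(DATABASE, query, WEIGHTS)
print(match.clip, match.index)
```

Running the search every few frames, and blending towards the winning frame rather than jumping to it, is what removes the need for hand-authored transitions.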

Ubisoft went even further with its Learned Motion Matching system, which trains neural networks on the existing motion data so that poses are produced by the networks rather than looked up in a large animation database. This is no longer simple playback of ready-made sequences: the model captures the patterns of movement in the data and reconstructs motion on the fly, with a much smaller memory footprint. The result? Animations that look natural in every situation, without artificial “gluing” between movements.

Ubisoft and AI in Code Analysis

Equally fascinating – though less spectacular – is Ubisoft’s approach to using AI in the actual game creation process. Their system automatically analyzes every code commit and flags potential problems at the development stage. It’s like having an experienced senior developer constantly looking over your shoulder and warning about potential bugs, performance issues, or logical errors.
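The article does not describe how Ubisoft’s system works internally, so the following is only a toy illustration of the general idea of learning from commit history. It assumes each past commit is reduced to a set of tokens and a flag saying whether it later turned out to introduce a bug; the tokens, the history, and the threshold are all invented for the example.

```python
from collections import Counter


def train(history):
    """history: list of (changed_tokens, introduced_bug) pairs from past commits."""
    buggy, total = Counter(), Counter()
    for tokens, introduced_bug in history:
        for token in set(tokens):
            total[token] += 1
            if introduced_bug:
                buggy[token] += 1
    # Laplace-smoothed share of commits touching a token that introduced a bug.
    return {t: (buggy[t] + 1) / (total[t] + 2) for t in total}


def risk(model, tokens, default=0.5):
    """Average per-token risk; tokens never seen before get a neutral default."""
    tokens = set(tokens)
    if not tokens:
        return default
    return sum(model.get(t, default) for t in tokens) / len(tokens)


# Invented history: physics changes caused bugs, UI changes did not.
HISTORY = [
    (["physics", "raycast", "pointer"], True),
    (["physics", "pointer", "cache"], True),
    (["ui", "label", "color"], False),
    (["ui", "layout"], False),
    (["audio", "cache"], False),
]
FLAG_THRESHOLD = 0.6  # assumed cut-off for asking a human to take a closer look

model = train(HISTORY)
risky = risk(model, ["physics", "pointer"])
safe = risk(model, ["ui", "color"])
print(f"physics change: {risky:.2f}, ui change: {safe:.2f}")
print("flag for review" if risky > FLAG_THRESHOLD else "ok")
```

A real system would work on much richer signals than bare tokens, but the workflow is the same: score each new commit against what went wrong in the past and surface the risky ones before they are merged.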

In practice, this means less crunch, faster development cycles, and, most importantly, fewer frustrating bugs in the final version of the game. This is AI serving developers rather than players, but everyone feels the effect.

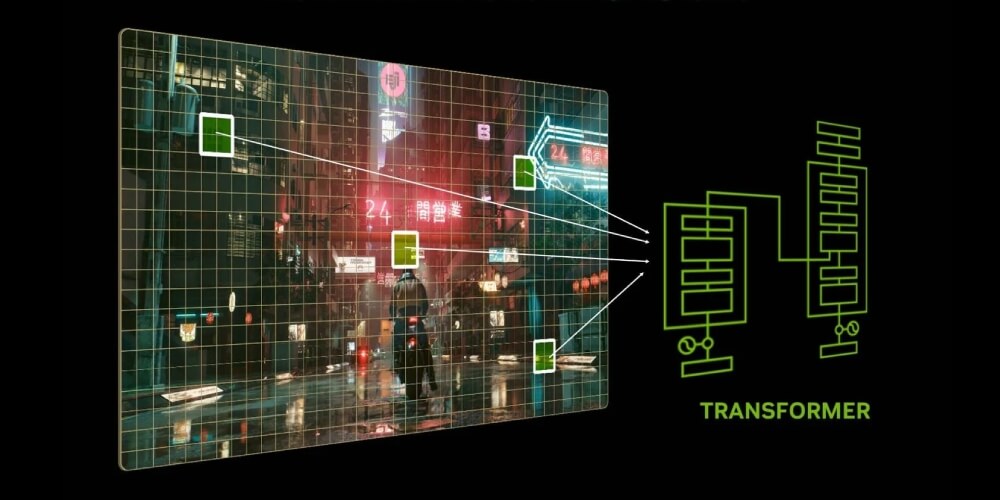

DLSS 4.0 – Revolution in Rendering

DLSS 4 is no longer just image upscaling: it moves to a new AI model and can generate several additional frames for each rendered one (Multi Frame Generation, available on RTX 50 series cards), which significantly improves performance in games. The technology allows players to achieve high frame rates even in the most demanding titles while maintaining image quality. For mid-range card owners, DLSS is often the only way to comfortably play the latest titles in high resolution.
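To get a feel for how much work upscaling and frame generation take off the GPU, a bit of arithmetic helps. The sketch below assumes an internal render scale of 0.5 per axis and three generated frames per rendered frame; both numbers are illustrative assumptions, and the real values depend on the game, the quality preset, and the card.

```python
def internal_resolution(width, height, render_scale):
    """Resolution the game actually renders at before upscaling."""
    return round(width * render_scale), round(height * render_scale)


def rendered_share(render_scale, generated_per_rendered):
    """Fraction of displayed pixels that were rendered conventionally.

    render_scale: internal resolution as a fraction of the output, per axis.
    generated_per_rendered: generated frames shown after each rendered frame.
    """
    pixel_share = render_scale ** 2                 # the rest comes from upscaling
    frame_share = 1 / (1 + generated_per_rendered)  # the rest comes from frame generation
    return pixel_share * frame_share


print(internal_resolution(3840, 2160, 0.5))  # (1920, 1080)
print(rendered_share(0.5, 3))                # 0.0625, i.e. 1 pixel in 16
print(rendered_share(1.0, 0))                # 1.0, native rendering
```

Under these assumptions only one displayed pixel in sixteen is rendered the traditional way, which explains both the performance gains and why it is tempting to lean on the technology instead of optimizing.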

The problem is that DLSS has become too convenient an alternative to proper optimization. Instead of improving code and optimizing games, developers often simply lower the rendering resolution and let DLSS do the rest. It’s like relying on filters instead of taking a good photograph in the first place. The effect might be similar, but the underlying problems remain unsolved. DLSS works great as an addition to a well-optimized game, but it shouldn’t be a pretext for a lazy approach to game development.

NVIDIA Neural Texture Compression – Salvation for Low VRAM Cards

One of the biggest problems with popular mid-range graphics cards is limited VRAM. As games require more and more memory to store textures, cards with 8 GB or even 12 GB of VRAM quickly reach their limits. NVIDIA’s Neural Texture Compression technology could be the answer to this problem, reducing texture memory usage by up to 90% while maintaining texture quality.
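A back-of-the-envelope calculation shows what such a reduction could mean for a single texture. The sketch assumes a 4096×4096 texture with a full mip chain (roughly one third extra), 4 bytes per texel uncompressed, and 1 byte per texel for conventional block compression. It also assumes the 90% figure is measured against the block-compressed size, which the article does not specify, and the 2 GiB texture budget is hypothetical.

```python
MIB = 1024 * 1024


def texture_bytes(width, height, bytes_per_texel, mip_chain=True):
    """Approximate size of one texture; a full mip chain adds about one third."""
    base = width * height * bytes_per_texel
    return base * 4 // 3 if mip_chain else base


def with_reduction(size_bytes, percent):
    """Size after cutting memory usage by the given whole-number percentage."""
    return size_bytes * (100 - percent) // 100


uncompressed = texture_bytes(4096, 4096, 4)      # RGBA, 8 bits per channel
block_compressed = texture_bytes(4096, 4096, 1)  # 1 byte per texel
neural = with_reduction(block_compressed, 90)    # assumed best case

for name, size in [("uncompressed", uncompressed),
                   ("block-compressed", block_compressed),
                   ("neural (assumed)", neural)]:
    print(f"{name:>18}: {size / MIB:6.1f} MiB")

# How many such textures fit into a hypothetical 2 GiB texture budget?
budget = 2 * 1024 * MIB
print(budget // block_compressed, "textures vs", budget // neural)
```

Under these assumptions the same budget holds ten times as many textures, which is the difference between streaming constantly and keeping a whole level resident.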

Instead of applying a fixed compression scheme, a small neural network learns to encode textures into a compact representation and to decode them on demand, reaching ratios that traditional algorithms can’t match at comparable quality. In practice, this means significantly more detailed game worlds on cards that previously struggled with VRAM shortages. The technology already works on older graphics cards, from the RTX 40 series up, showing that AI in game development isn’t just a toy for owners of the most expensive hardware.

Text-to-Speech and Ethical Dilemmas

Contemporary text-to-speech systems have improved significantly, though a careful listener can still tell them apart from real actors. Cyberpunk 2077 showed a balanced and ethical approach to this technology, using AI to generate missing lines after the death of one of the voice actors, with the full consent and cooperation of the artist’s family.

Problems may arise in the future if studios start using actors’ voices without their consent or appropriate compensation. At the same time, the technology opens the door to game localization on an unprecedented scale: instead of hiring hundreds of actors in each country, a studio can train AI on a few voices and generate natural dialogue in dozens of languages. This democratizes access to games on a global scale.

NVIDIA ACE – Future or Proof of Concept?

NVIDIA ACE enables the creation of NPCs capable of conducting natural conversations with players. Each conversation can be unique, and every question receives a contextual response. NVIDIA has already shown demos in PUBG, where AI partners communicate with players like real teammates.
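What makes a response “contextual” is that the current game state and the recent conversation are handed to the language model together with the player’s line. The sketch below illustrates that pattern only; it does not use or imitate the ACE API. The `Npc` class, the prompt layout, and `fake_model` (a stand-in so the example runs offline) are all hypothetical.

```python
from collections import deque


class Npc:
    def __init__(self, persona, generate, memory_lines=4):
        self.persona = persona
        self.generate = generate                  # any callable: prompt -> reply text
        self.memory = deque(maxlen=memory_lines)  # keeps only the most recent lines

    def build_prompt(self, game_state, player_line):
        state = ", ".join(f"{k}={v}" for k, v in sorted(game_state.items()))
        history = "\n".join(f"{who}: {text}" for who, text in self.memory)
        return (f"Persona: {self.persona}\n"
                f"Game state: {state}\n"
                f"History:\n{history}\n"
                f"Player: {player_line}\nNPC:")

    def reply(self, game_state, player_line):
        answer = self.generate(self.build_prompt(game_state, player_line))
        self.memory.append(("Player", player_line))
        self.memory.append(("NPC", answer))
        return answer


def fake_model(prompt):
    """Stand-in for a real language model; reacts to one game-state value."""
    return "Low on ammo, cover me!" if "ammo=3" in prompt else "All clear."


teammate = Npc("calm squad mate", fake_model)
first = teammate.reply({"ammo": 3, "zone": "closing"}, "How are you doing?")
second = teammate.reply({"ammo": 90, "zone": "safe"}, "And now?")
print(first)
print(second)
```

Swapping `fake_model` for a real model changes the quality of the answers but not the structure: the NPC is only as aware of the game as the context it is given.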

Although the technology is impressive, questions remain about its practicality. Do players actually want such realistic NPCs? Sometimes simplicity and predictability have their charm. ACE could be a breakthrough, but it might equally well turn out to be an expensive technological curiosity.

Next Level – What Awaits Us?

AI image generation is now commonplace, and AI-created films are reaching the next level of quality. The still-nascent field of AI-generated 3D models will probably follow a similar path. Today we can already generate basic 3D models from text descriptions, but this is just the beginning.

Perhaps we’re facing a completely new rendering paradigm based on Gaussian Splats? But that’s a topic for a completely different story that deserves its own article.

The Future of AI in Game Development

Jensen Huang from NVIDIA predicts that by 2030 we’ll see the first completely AI-generated games. Google is already experimenting with AI that generates playable pseudo-games much like a video, but with world continuity and player input. However, I think this is a dead end; the future lies rather in using AI as a tool in the game creation process.

Contemporary game development is an increasingly long and expensive process. Some AAA games take 5 to 7 years to produce and cost hundreds of millions of dollars. AI can help shorten these cycles by automating tedious tasks and allowing developers to focus on what’s really important: creativity and innovation.

It’s important to remember, however, that AI should be a co-creator, not a competitor to humans. The best results arise when technology supports developers’ creativity rather than replacing it. Ultimately, it’s humans who give games soul and emotions – AI just helps them do it faster and more efficiently.